Language processing and language in the brain (psycholinguistics and computational linguistics)

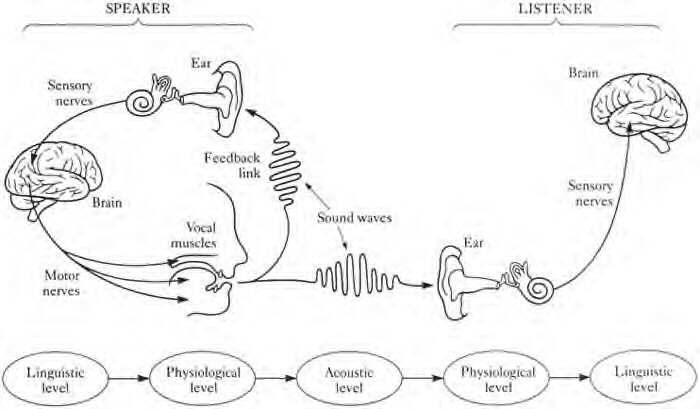

A model of linguistic communication

We start with a simple -- perhaps simplistic -- picture of speech communication, which we've seen before.

- a person has an idea she wishes to communicate

- she puts it into words and utters them

- another person hears the sound, recognizes the words, and grasps the speaker's intent

It is worth noting that several aspects of this picture are controversial. Some philosophers doubt that meanings are things that can be put into one-to-one correspondence with phrases of ordinary language, or can ever be said to be fully shared between two people, or even are well-defined things at all. Some social scientists observe that most linguistic communication is a cooperative process that is not well modeled by making the speaker entirely the active creator of a message, and the listener entirely its passive recipient.

Nevertheless, there are many circumstances where this perspective gives a useful common-sense framework, and it lies behind most research on speaking and understanding speech. Although we are all able to speak and to understand, we have no conscious access to the many complex neurophysiological processes that underlie these abilities, and so an experimental approach is necessary.

In this lecture we'll be concentrating less on the nature of linguistic elements themselves -- sounds, morphological and semantic structures etc. -- and more on how they are dealt with in the mind and the brain. That is, we'll be looking at cognitive structures and processing of linguistic material in the mind, and also at how these cognitive structures are realized in the physical human brain. We'll begin with the mind half, and then move on to the brain.

Psycholinguistics

Linguists and psycholinguists have looked at many aspects of the production and perception of spoken language, too many to do more than list in a single lecture. So, as has been our practice in previous lectures, rather than give a whirlwind tour of issues and techniques, we'll look in a bit of detail at two kinds of studies -- those that use speech errors to learn about language production, and those that look at the time course of spoken word recognition. Keep in mind that these are two small parts of a very large and interesting picture, about which you can learn much more by taking a course in psychology of language.

A window on language generation: slips of the tongue and pen.

In figuring out how the mind works, one standard line of inquiry is to look at how it fails. This approach was first taken to the problem of speech generation by Sigmund Freud, in his 1901 work The Psychopathology of Everyday Life.

Freud focused on the substitution of words either in speech (lapsus linguae, slips of the tongue) or in writing (lapsus calami, slips of the pen). The substitution is contrary to the conscious wishes of the person speaking or writing, and in fact sometimes is subversive of these wishes. The speaker or writer may be unaware of the error, and may be embarrassed when the error is pointed out. Freud believed that such "slips" come from repressed, unconscious desires.

Freud's general term for such errors was "faulty performance (Fehlleistung)," which has been translated as the pseudo-Greek scientism parapraxis. The colloquial label is "Freudian slip."

In Freud's analysis, a slip of the tongue is a form of self-betrayal. Here are a few of the examples he cited.

- The President of the Austrian Parliament said "I take notice that a full quorum of members is present and herewith declare the sitting closed!"

- A hotel boy, knocking at the bishop's door, was asked "Who is it?" and nervously replied "The Lord, my boy!" (instead of "The boy, my Lord!").

- A member of the British House of Commons referred to another as the honourable member for "Central Hell," instead of "Hull."

- A professor says, "In the case of the female genital, in spite of the tempting ... I mean, the attempted ..... "

- When a lady, appearing to compliment another, says "I am sure you must have thrown this delightful hat together" instead of "sewn it together", no scientific theories in the world can prevent us from seeing in her slip the thought that the hat is an amateur production. Or when a lady who is well known for her determined character says: "My husband asked his doctor what sort of diet ought to be provided for him. But the doctor said he needed no special diet, he could eat and drink whatever I choose", the slip appears clearly as the unmistakable expression of a consistent scheme.

- Slips of the tongue often give this impression of abbreviation; for instance, when a professor of anatomy at the end of his lecture on the nasal cavities asks whether his class has thoroughly understood it and, after a general reply in the affirmative, goes on to say: "I can hardly believe that this is so, since persons who can thoroughly understand the nasal cavities can be counted, even in a city of millions, on one finger ... I mean, on the fingers of one hand." The abbreviated sentence has its own meaning: it says that there is only one person who understands the subject.

This cartoon expresses the sense of a slip of the tongue as a betrayal of inner thoughts.

The cartoon has a few other linguistic aspects. It would be irresponsible for us not to point out that the pilgrim's use of archaic verb forms is entirely bogus. The /-st/ ending in didst is the archaic second person singular past, whereas the context calls for third person singular past; and goeth is archaically inflected for third person singular present, rather than being the bare verb stem as required by the where did X go? construction.

Bogus archaisms aside, the cartoon presents an accurate picture of Freud's idea of how slips of the tongue arise.

There has been quite a bit of research on slips of the tongue since 1901, and (to the extent that Freud's theory is susceptible of empirical test) this research tends to undermine Freud's conception and to substitute another one: the characteristics of slips are the result of the information-processing requirements of producing language. If this theory is correct, then slips tell us much less than Freud thought about unconscious intentions, and much more about language structure and use.

Linguistic theory tells us that there is a hierarchy of units below the level of the sentence: phrase, word, morpheme, syllable, syllable-part (such as onset or rhyme), phoneme, phonological feature. Slips can occur at each of these levels. In addition, slips can be of several types: substitution (of one element for another of the same type), exchange (of two elements of the same type within an utterance), shift (of an element from one place to another within the utterance), perseveration (re-use of an element a second time, after the 'correct' use), anticipation (re-use of an element, before the 'correct' use).

In a review article entitled Speaking and Misspeaking (published in Gleitman and Liberman, Eds., An Invitation to Cognitive Science), Gary Dell gives the following made-up examples, all related to the target utterance "I wanted to read the letter to my grandmother."

- phrase (exchange): "I wanted to read my grandmother to the letter."

- word (substitution): "I wanted to read the envelope to my grandmother."

- inflectional morpheme (shift): "I want to readed the letter to my grandmother."

- stem morpheme (exchange): "I readed to want the letter to my grandmother."

- syllable onset (anticipation): "I wanted to read the gretter to my grandmother."

- phonological feature (anticipation or perseveration): "I wanted to read the letter to my brandmother."
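To make the word-level part of this taxonomy concrete, here is a minimal Python sketch that sorts a slip into exchange, anticipation, perseveration or substitution by comparing the intended utterance with what was said, word by word. It is a toy under stated assumptions: both utterances must have the same number of words (so shifts, and multi-word phrase exchanges like Dell's first example, land in an "other" bucket), it compares spelling rather than sound, and the function name `classify_word_slip` is our own, not a standard tool.

```python
def classify_word_slip(target: str, produced: str) -> str:
    """Roughly classify a word-level slip by comparing two utterances.

    Assumes both utterances have the same number of words, so position i
    in one lines up with position i in the other.
    """
    t, p = target.split(), produced.split()
    if len(t) != len(p):
        return "other"  # shifts, additions and deletions are not handled
    diffs = [i for i in range(len(t)) if t[i] != p[i]]
    if len(diffs) == 2:
        i, j = diffs
        if t[i] == p[j] and t[j] == p[i]:
            return "exchange"  # two words traded places
    if len(diffs) == 1:
        i = diffs[0]
        intruder = p[i]
        if intruder in t[i + 1:]:
            return "anticipation"  # a later word used too early
        if intruder in t[:i]:
            return "perseveration"  # an earlier word used again
        return "substitution"  # the intruder comes from outside the utterance
    return "other"


target = "I wanted to read the letter to my grandmother"
print(classify_word_slip(target, "I wanted to read the envelope to my grandmother"))  # substitution
print(classify_word_slip("The buttons fall off his shirts",
                         "The shirts fall off his buttons"))                         # exchange
# Two constructed examples (not from any corpus):
print(classify_word_slip(target, "I wanted to read the grandmother to my grandmother"))  # anticipation
print(classify_word_slip(target, "I wanted to read the letter to my letter"))            # perseveration
```

Real speech-error coding is done by hand and works at every level of the hierarchy, down to phonological features; this sketch only shows how the word-level categories differ from one another.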

Why should mistakes of these kinds occur? The basic facts of the case suggest the reason: talking is a hard thing to do! In fact, fluent speech articulation has been called our most complex motor skill.

Language is a complex and hierarchical system. Language use is creative, so that each new utterance is put together on the spot out of the pieces made available by the language being spoken. A speaker is under time pressure, typically choosing about three words per second out of a vocabulary of 40,000 or more, while at the same time producing perhaps five syllables and a dozen phonemes per second, using more than 100 finely-coordinated muscles, none of which has a maximum gestural repetition rate of more than about three cycles per second. Word choices are being made, and sentences constructed, at the same time that earlier parts of the same phrase are being spoken.

Given the complexities of speaking, it's not surprising that, on average, about one slip of the tongue occurs per thousand words spoken. In fact, perhaps it is surprising that more of us are not like Mrs. Malaprop or Dr. Spooner.
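The time pressure described above can be made concrete with a little arithmetic. This Python sketch simply converts the rates quoted in the text (about three words, five syllables and a dozen phonemes per second, and roughly one slip per thousand words) into average time budgets. The rates are rough averages, and real speech varies a great deal around them.

```python
# Rates quoted in the text (rough averages, not precise measurements).
WORDS_PER_SEC = 3
SYLLABLES_PER_SEC = 5
PHONEMES_PER_SEC = 12
WORDS_PER_SLIP = 1000  # about one slip per thousand words


def ms_per_unit(rate_per_sec: float) -> float:
    """Average time available for each unit, in milliseconds."""
    return 1000.0 / rate_per_sec


for name, rate in [("word", WORDS_PER_SEC),
                   ("syllable", SYLLABLES_PER_SEC),
                   ("phoneme", PHONEMES_PER_SEC)]:
    print(f"{name}: about {ms_per_unit(rate):.0f} ms each")
# word: about 333 ms each
# syllable: about 200 ms each
# phoneme: about 83 ms each

# Seconds of nonstop talking between slips, at the quoted word rate.
seconds_per_slip = WORDS_PER_SLIP / WORDS_PER_SEC
print(f"one slip every {seconds_per_slip / 60:.1f} minutes")  # one slip every 5.6 minutes
```

So each phoneme gets well under a tenth of a second, yet a typical speaker talking without pause would produce only about one slip every five or six minutes.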

Mrs. Malaprop was a character in Richard Brinsley Sheridan's play "The Rivals" (1775), who used words "mal à propos", French for "out of place". Some of her usages were "She's as headstrong as an allegory on the banks of the Nile" (alligator, although crocodile would be more correct); "Comparisons are odorous" (odious); "...you will promise to forget this fellow -- to illiterate him, I say, quite from your memory" (obliterate or perhaps eradicate); "He is the very pineapple of politeness" (for pinnacle).

Some of Yogi Berra's witticisms owe something to Mrs. Malaprop: "I just want to thank everyone who made this day necessary" (possible); "Even Napoleon had his Watergate" (Waterloo).

Spooner was a real historical figure -- the Reverend William A. Spooner, Dean and later Warden of New College, Oxford, in the late nineteenth and early twentieth centuries -- whose alleged propensity for exchange errors gave the name of spoonerism to this class of speech error. The term came into general use within his lifetime. Some of the exchanges attributed (apocryphally) to him are:

- Work is the curse of the drinking classes. (Drinking is the curse of the working classes)

- ...noble tons of soil... (noble sons of toil)

- You have tasted the whole worm. (wasted the whole term)

- I have in my bosom a half-warmed fish. (half-formed wish)

- ...queer old dean... (dear old queen, referring to Queen Victoria).
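The mechanics of an onset exchange can be sketched in a few lines of Python. This minimal sketch swaps the initial consonants of two words, using spelling as a stand-in for sound (a simplifying assumption: slips happen to sounds, not letters); `split_onset` and `exchange_onsets` are illustrative names, not part of any library.

```python
from __future__ import annotations

VOWEL_LETTERS = set("aeiou")


def split_onset(word: str) -> tuple[str, str]:
    """Split a word into its leading consonant letters and the remainder.

    Spelling stands in for sound here, so this only works for words whose
    spelling transparently reflects their initial consonants.
    """
    i = 0
    while i < len(word) and word[i].lower() not in VOWEL_LETTERS:
        i += 1
    return word[:i], word[i:]


def exchange_onsets(first: str, second: str) -> tuple[str, str]:
    """Swap the initial consonants of two words, as in a spoonerism."""
    onset1, rest1 = split_onset(first)
    onset2, rest2 = split_onset(second)
    return onset2 + rest1, onset1 + rest2


print(exchange_onsets("sons", "toil"))    # ('tons', 'soil')
print(exchange_onsets("formed", "wish"))  # ('wormed', 'fish')
```

The second call shows the limits of working with letters: the spoken slip swaps the sounds /f/ and /w/, and /w/ followed by the vowel of "formed" is spelled "warmed", not "wormed".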

The New College home page does admit that he once announced a hymn as 'Kinquering kongs their tikles tate' (for 'Conquering kings their titles take'). He is said to have been a "lucid and gifted conversationalist," though perhaps a bit scatterbrained in general. There is said to be good authority for his asking an undergraduate he came across in the quad, 'Now let me see. Was it you or your brother who was killed in the war?', and for his invitation to a young don: 'Do come to dinner tonight. We have that new Fellow, Casson, coming.' 'But Warden, I am Casson.' 'Oh, well. Never mind. Come anyway.'

Linguists have accumulated large collections of speech errors, and used the statistical distribution of such errors to evaluate models of linguistic structure and the process of speaking. For instance, the distribution of unit sizes in a corpus of exchanges, reproduced below, has been argued to tell us that words, morphemes and phonemes are especially important units in the process of speaking, because these are the levels at which the most errors occur:

Many other details of the distribution of speech errors are also revealing. For example, word-level slips of all kinds obey the syntactic category rule: the target (i.e. the word replaced) and the substituting word are almost always of the same syntactic category. Nouns replace nouns, verbs replace verbs, and so on.

The syntactic category rule is by far the strongest influence on word-level errors. There are other influences -- for instance, the substituting word tends to be related in meaning and in sound to the target -- but these are generally less strong.

When the substituting word comes completely from outside the utterance -- rather than being an exchange of words or an anticipation or perseveration of words within the utterance -- this is called a "non-contextual word substitution." In such cases, it is common for the substitute and the target to be semantically and pragmatically similar. For instance, U.S. President Gerald Ford once toasted Egyptian President Anwar Sadat "and the great people of Israel -- Egypt, excuse me," and we can probably all recall making very similar mistakes ourselves. However, even in such non-contextual word substitutions, there are other influences besides semantic similarity, such as association with nearby words, or similarity in pronunciation. For example, in one of the speech-error corpora, a speaker refers to "Liszt's second Hungarian restaurant" instead of "Liszt's second Hungarian rhapsody." Restaurant and rhapsody are not particularly similar in meaning, but both are associated with Hungarian -- and both are three-syllable words with initial stress that start with /r/.

Note that in this case, as in nearly all such cases, the syntactic category rule is obeyed -- restaurant and rhapsody are both nouns.
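One way to picture the comparison in the "rhapsody"/"restaurant" case is to record a few properties for each word and check which ones the target and the substitute share. In this minimal Python sketch, the `WordInfo` record and its hand-coded entries are illustrative assumptions; they encode only the properties the text mentions (syntactic category, three syllables, initial stress, initial /r/).

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class WordInfo:
    category: str         # syntactic category
    syllables: int        # number of syllables
    initial_stress: bool  # stress on the first syllable?
    first_sound: str      # initial phoneme


# Hand-coded, illustrative entries for the example in the text.
LEXICON = {
    "rhapsody": WordInfo("noun", 3, True, "r"),
    "restaurant": WordInfo("noun", 3, True, "r"),
}


def shared_properties(target: str, substitute: str, lexicon=LEXICON) -> list[str]:
    """Names of the recorded properties on which the two words agree."""
    a, b = lexicon[target], lexicon[substitute]
    return [f.name for f in fields(WordInfo)
            if getattr(a, f.name) == getattr(b, f.name)]


print(shared_properties("rhapsody", "restaurant"))
# ['category', 'syllables', 'initial_stress', 'first_sound']
```

The shared association with "Hungarian" is a relation between words rather than a property of either word, so a per-word record like this cannot capture it.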

There is a large scientific literature in which linguists and psycholinguists examine numerous detailed properties of speech error corpora. One general observation that emerges is that Freud's cited examples are atypical. There are very few naturally-occurring speech errors in which one can see any evidence of repressed fears or desires, either in motivating the use of the incorrect utterance or the avoidance of the correct one. Some observed errors are:

- The shirts fall off his buttons (buttons fall off his shirts)

- The ricious vat (vicious rat)

- A curl galled her up (girl called)

- ...sissle theeds... (thistle seeds)

- My jears are gammed (gears are jammed)

- ...by the sery vame... (very same)

- This is the most lescent risting (recent listing)

- Did the grass clack? (glass crack)

- I have a stick neff (stiff neck)

- is noth wort knowing (not worth)

A more parsimonious theory would be that speech errors occur simply because talking is a cognitively complex and difficult task, with many opportunities for mixups in memory and execution. This does not exclude examples of the kind that interested Freud -- a substituted word might be "primed" by association with unexpressed wishes or fears, or exempted from normal "editing" processes by the same associations.

In fact, Motley (1980) was able to create "Freudian slip" effects of this kind in the experimental induction of speech errors. He used one of the standard techniques for inducing phonemic exchange errors, which works as follows. The subject is asked to read a list of word pairs such as "dart board." Some of these are target pairs, in which the experimenter hopes to induce an error, and some are bias pairs. A "bias pair" has something in common (say initial phonemes) with the desired error. Three bias pairs precede every target pair. A sample sequence of this kind is:

- dart board (bias pair)

- dust bin (bias pair)

- duck bill (bias pair)

- barn door (target pair: --> darn bore?)
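The logic of the bias-pair procedure can be sketched in Python. The sketch assumes, following the example above, that a bias pair is one whose words begin with the target's initial consonants in reversed order, and it uses spelling as a rough stand-in for initial phonemes; the function names are hypothetical, not taken from any published experimental software.

```python
from __future__ import annotations


def onset_letters(word: str) -> str:
    """Leading consonant letters of a word (spelling as a proxy for sound)."""
    i = 0
    while i < len(word) and word[i].lower() not in "aeiou":
        i += 1
    return word[:i]


def is_bias_pair(bias: tuple[str, str], target: tuple[str, str]) -> bool:
    """True if the bias pair starts with the target's onsets in reversed order."""
    return (onset_letters(bias[0]) == onset_letters(target[1])
            and onset_letters(bias[1]) == onset_letters(target[0]))


def slip_trial(pool: list[tuple[str, str]], target: tuple[str, str],
               n_bias: int = 3) -> list[tuple[str, str]]:
    """Pick n_bias matching bias pairs from the pool and append the target."""
    chosen = [pair for pair in pool if is_bias_pair(pair, target)][:n_bias]
    if len(chosen) < n_bias:
        raise ValueError("not enough matching bias pairs for this target")
    return chosen + [target]


pool = [("dart", "board"), ("tool", "kits"), ("dust", "bin"), ("duck", "bill")]
for first, second in slip_trial(pool, ("barn", "door")):
    print(first, second)
# dart board
# dust bin
# duck bill
# barn door
```

The non-matching pair ("tool kits") is skipped, and three d...b pairs set up the d...b pattern that the target "barn door" may then be pulled into.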

Under these conditions, subjects produce about 10-15% spoonerisms on the target items. The experimenter can then systematically examine the factors that make errors more or less likely. For instance, errors are generally more likely when the results are real words (barn door --> darn bore) than when the results are not (born dancer --> dorn bancer), and more likely when the rest of the target words are phonologically similar (e.g. we get left hemisphere --> heft lemisphere, where the same vowel follows, more frequently than right hemisphere --> hight remisphere, where different vowels follow).

In Motley's 1980 experiment, he used manipulation of the experimental context as the independent variable. The subjects were male undergraduates, and the context was either electrical or sexual. In the "electrical" context, the subjects were attached to (fake) electrodes and told that mild shocks would be administered if they performed badly. In the "sexual" context, the test was administered by a provocatively-dressed and conventionally attractive female experimenter (it's not clear if subjects' sexual preference was controlled for).

Motley then looked at the likelihood of errors whose output has electrical associations (as in the case of the word pair shad bock), as opposed to sexual ones (as in the word pair tool kits). He found that errors tended to correspond to the contextual conditions: in the electrical context, electrical errors were more common, while in the sexual condition, sexual errors were more common.

Motley's results show that genuinely Freudian slips -- errors that reveal unexpressed thoughts -- do happen. At least, slips of the tongue can be primed or biased in the direction of topics or concepts that are on the speaker's mind. However, the same experiment shows that it is easy to cause slips of the tongue for purely phonological reasons, without any semantic or even lexical priming. We can conclude that many speech errors -- perhaps most speech errors -- do not reveal the speaker's secret fears and desires, but rather the innocent (if still hidden) properties of his or her language production system.

Speech non-errors: l'art du contrepet

The French, subtle as always, have for several centuries practiced a form of linguistic joke called the contrepet, which is a sort of Freudian slip waiting to happen. The joke occurs in the form of a phrase, itself innocuous and plausible, which if it were to be subjected to a particular exchange (of phonemes, syllables or words), would become obscene and scurrilous. For example:

- Le pape ne veut pas qu'on tue.

This means "the Pope does not want one to kill" -- reasonable enough. If the initial consonants of the last two words are swapped, however, then the phrase becomes suitably obscene and scurrilous.

A few examples are disrespectful rather than obscene, such as this one:

- Il y a deux espèces de gendarmes, les courts et les longs.

The meaning is "there are two kinds of policemen, short ones and long ones." If the initial consonants of courts "short" and longs "long" are swapped, the result would be spelled "les lourds et les cons", and means "there are two kinds of policemen, heavy ones and stupid ones."

The word contrepet means "counter-flatulence", the idea apparently being that the hidden "counter" phrase emerges like passing gas. The prestigious series Le Livre de Poche has published a volume on this topic by one Luc Etienne, entitled L'Art du Contrepet.

Although contrepèterie is mainly practiced by French adults rather than children, it shares with the language games of children (like pig Latin and other similar "secret" languages) the property of depending on sound rather than on spelling. In addition, it depends on the author and the audience sharing an understanding of what speech errors are like.

Many famous French writers -- Rabelais, de Vigny, Hugo, Jarry -- have devoted themselves to this form. As far as I know, this particular kind of word-play has never caught on in English-speaking countries, though perhaps the target word pairs in Motley's "sexual" condition might be considered as somewhat lame Anglo-Saxon contrepèterie.

Speech perception

The quality of human speech perception is astonishing. Even without conversational context, arbitrary isolated spoken words are perceived as the speaker intended about 98 times out of a hundred. In context, with decent sound quality, perceptual errors are even more rare.

Speech perception is not only very accurate, it is also very rapid. A spoken word unfolds in time over the course of perhaps half a second. Some clever experimental techniques have demonstrated that human speech perception normally keeps up with the flow of speech -- we recognize words as they are spoken, and often before they have been completely pronounced.

Speech perception can be studied on many levels, but in this lecture, we'll limit ourselves to a brief account of some of the work on the time course of spoken word recognition, and some of the factors that influence it.

Towards the beginning of a word's utterance, the acoustic evidence is consistent with many possible continuations. For instance, when we've heard the initial consonant cluster and the start of the vowel in the word bride, what we've heard is consistent with several other words, such as brine, bribe, and briar.

We can define the uniqueness point as the point at which the word becomes uniquely identifiable - i.e. no other words in the mental lexicon (a fancy word for dictionary) continue from that beginning.

t: tea, tree, trick, tread, trestle, trespass, top, tick, etc.

tr: tree, trick, tread, trestle, trespass, etc.

tre: tread, trestle, trespass, etc.

tres: trestle, trespass, etc.

tresp: trespass (uniqueness point has been reached, at least as far as the stem morpheme is concerned)
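The narrowing of the cohort shown above can be sketched as a prefix search over a toy lexicon. In this Python sketch each word is stored as a sequence of sounds (a rough broad transcription we supplied for the words in the example, not taken from any particular dictionary); `cohort` returns the words compatible with what has been heard so far, and `uniqueness_point` counts how many sounds must be heard before only one candidate remains. A real mental lexicon is vastly larger, so real cohorts are larger too.

```python
from __future__ import annotations


def phones(transcription: str) -> tuple[str, ...]:
    """Turn a space-separated transcription into a tuple of sounds."""
    return tuple(transcription.split())


# Toy lexicon: the words from the example, in a rough broad transcription.
LEXICON = {
    "tea": phones("t i"),
    "tree": phones("t r i"),
    "trick": phones("t r ɪ k"),
    "tread": phones("t r ɛ d"),
    "trestle": phones("t r ɛ s ə l"),
    "trespass": phones("t r ɛ s p ə s"),
    "top": phones("t ɑ p"),
    "tick": phones("t ɪ k"),
}


def cohort(prefix, lexicon=LEXICON) -> list[str]:
    """Words whose pronunciation begins with the given sequence of sounds."""
    prefix = tuple(prefix)
    return sorted(w for w, p in lexicon.items() if p[:len(prefix)] == prefix)


def uniqueness_point(word: str, lexicon=LEXICON):
    """Number of sounds heard before `word` is the only candidate left.

    Returns None if no prefix is unique, e.g. when the word is itself the
    beginning of a longer word in the lexicon.
    """
    p = lexicon[word]
    for i in range(1, len(p) + 1):
        if cohort(p[:i], lexicon) == [word]:
            return i
    return None


for i in range(1, 6):
    prefix = LEXICON["trespass"][:i]
    print("".join(prefix), cohort(prefix))
print(uniqueness_point("trespass"))  # 5
```

The loop prints the shrinking cohort for each prefix of "trespass", matching the lists above, until only "trespass" is left after five sounds.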

A simple approach to charting the time course of word recognition is just to ask subjects to listen for a particular word in running speech, and to press a key when they hear it. Typical time values for this kind of task, for one- and two-syllable content words in normal utterance contexts, are about 250-275 milliseconds after the onset of the word. If we allow 50-75 milliseconds for the generation of the response (i.e. physically pressing the key), then an internal decision time of about 200 milliseconds (or one fifth of a second) from the word onset is estimated. Since such words are typically about 400 milliseconds long, this implies that the internal decision is generally taking place when only about half the word has been heard!
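The subtraction behind the 200-millisecond estimate can be spelled out. This small Python sketch pairs the extremes of the two quoted ranges to get the widest window for the internal decision consistent with those figures; the middle of that window is the "about 200 milliseconds" in the text, which is half of a typical 400-millisecond word. The function name is ours, for illustration only.

```python
def decision_window(response_ms, motor_ms):
    """Internal decision time = key-press latency minus response-generation time.

    Both arguments are (low, high) ranges in milliseconds. Pairing the
    fastest key press with the slowest motor time (and vice versa) gives the
    widest window consistent with the quoted figures.
    """
    low = response_ms[0] - motor_ms[1]
    high = response_ms[1] - motor_ms[0]
    return low, high


low, high = decision_window((250, 275), (50, 75))
WORD_MS = 400  # typical length of such words, per the text
print(low, high)                      # 175 225
print(low / WORD_MS, high / WORD_MS)  # 0.4375 0.5625
print((low + high) / 2 / WORD_MS)     # 0.5
```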

Does this mean that the internal decision is taking place at the uniqueness point? Actually, it seems that (in normal utterance contexts) words are usually recognized even before their uniqueness point! Of course, in doing so, the listener is taking a chance on being wrong -- presumably the gamble is motivated by the predictive value of the context.

There are several ways to estimate the uniqueness point of a word. One is to look in the dictionary to see what other words have pronunciations that start the same way, as we did above. Another, more direct method is the gating paradigm. This technique simply involves cutting the sound of the word off before it is finished, playing the incomplete word to listeners, and tabulating their guesses about what the word is.

The plots below show subjects' response to gated versions of two words that start the same way -- shark and sharp. These monosyllables are about 600 milliseconds long, because they were spoken carefully in isolation. As the plots show, reliable identification (16 out of 16 responses correct) is not achieved until near the end of the word's pronunciation, after the release of the final consonant has been heard.

At earlier points, the more common word ("sharp") is guessed more often no matter which is actually being heard. As more and more of the word shark is heard, "sharp" responses decrease and "shark" responses take over. When the word is actually sharp all along, "sharp" responses start out ahead, and gradually drive out the few "shark" responses.

These results show us that by half-way through the time course of the word, there is by no means adequate acoustic information to distinguish these words (much less after only 200 milliseconds). In an experiment looking at words in running speech, the average word recognition point was estimated to be less than 200 milliseconds after word onset, as usual, while a set of gating experiments showed that the average acoustic decision point for the same set of spoken words was more than 300 msec. after word onset. This is known as the "early selection" effect.

The graphs above show us something else -- subjects are "betting the odds" by choosing the more frequent word, in the absence of other evidence. This same pattern applies for words recognized in normal utterance context -- the context will bias the choice one way or the other, usually very strongly, even without acoustic evidence.

Here are a few sentence beginnings, chosen at random from the 1995 New York Times wire service, some continuing with "sharp" while others continue with "shark". Can you tell them apart?

- She once went deep-sea fishing, caught a 275-pound ___ . . .

- The Kerrey-Danforth recommendations drew ___ . . .

- Moon (29 of 52 for 292 yards, 2 touchdowns and 2 interceptions) wasn't ___ . . .

- After writing about monsters like the cat-like dinofelis and Peter Benchley's white ___ . . .

- Dallas looked ___ . . .

- Encountering a ___ . . .

- I've swam with billfish and every type of ___ . . .

- A good high-speed jointer with ___ . . .

Some of them are a little tough (for instance, you could plausibly encounter a shark, or encounter a sharp object of some kind), but for the most part only one of the two words looks reasonable in the context. Thus once you've heard enough of the word to narrow the choice down, the context gives you a basis for guessing.

In the 52.8 million words of the 1995 New York Times newswire, "sharp" occurs 1422 times while "shark" occurs only 101 times. Thus in the absence of any evidence, either from sound or from context, the smart bet (roughly 14 to 1 odds) is "sharp" -- as indeed is shown by the responses of the subjects in the graphs above. Once enough acoustic evidence has come in that the 'cohort' of plausible words can be narrowed down roughly to "shark" or "sharp" (perhaps along with "shard" -- 14 occurrences in the 1995 NYT -- and a few other very uncommon possibilities), the subjects are giving roughly 14 times as many "sharp" responses as "shark" responses, even when the word will really turn out to be "shark". It turns out that people are exquisitely sensitive to this sort of frequency information about their language -- not many people can make mathematically correct bets in poker or other card games, but every normal person can (unconsciously) "play the odds" to a couple of decimal places in understanding speech.
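The betting strategy described above can be sketched as frequency-based prior probabilities that context then reweights, in the spirit of Bayes' rule. In this Python sketch the newswire counts come from the text; the context weights in the usage example are invented purely for illustration, and treating perception as a literal Bayesian calculation is a modeling assumption, not a claim made here.

```python
# Word counts in the 52.8-million-word 1995 New York Times newswire (from the text).
NYT_1995_COUNTS = {"sharp": 1422, "shark": 101, "shard": 14}


def frequency_prior(cohort, counts=NYT_1995_COUNTS):
    """Each candidate's share of the total count within the cohort."""
    total = sum(counts[w] for w in cohort)
    return {w: counts[w] / total for w in cohort}


def reweight(prior, context_weight):
    """Multiply each prior by a context weight and renormalize.

    The weight plays the role of P(context | word) up to a constant,
    so the result is a Bayes'-rule posterior over the cohort.
    """
    unnormalized = {w: prior[w] * context_weight[w] for w in prior}
    z = sum(unnormalized.values())
    return {w: v / z for w, v in unnormalized.items()}


prior = frequency_prior(["sharp", "shark"])
print(round(prior["sharp"] / prior["shark"], 1))  # 14.1
print(round(prior["sharp"], 3))                   # 0.934

# Invented weights for a fishing context ("...caught a 275-pound ___"):
posterior = reweight(prior, {"sharp": 1.0, "shark": 100.0})
print(round(posterior["shark"], 3))               # 0.877
```

With no context, "sharp" gets about 93% of the probability; a strongly shark-friendly context is enough to reverse the bet even though "shark" is the rarer word.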

A natural hypothesis is that this educated gambling is what is responsible for early selection. Many experiments have supported this hypothesis. For instance, listeners respond to a word more slowly when it occurs in an implausible context ("John buried the guitar"), and even more slowly when it occurs in a semantically anomalous context ("John drank the guitar").

The increased lag-time in a response caused by semantic anomaly is generally rather small -- perhaps 50 msec. Overall there is a strong asymmetry in the role of "bottom-up" (acoustic) evidence and "top-down" (contextual) evidence. Good acoustic evidence will override even the strongest contextual preferences -- if someone says "John drank the guitar", then listeners will think the phrase is weird, but they will hear it nevertheless.

Responses slow down even further if the input is not even syntactically plausible -- "The drank John guitar", or worse. Experimenters sometimes call such quasi-random sequences word salad, because it is like the results of mixing up a bunch of words in a salad bowl, rather than using the rules of syntax to construct a real sentence. In word salad, the listener has nothing to rely on except the acoustic evidence and the effects of raw word frequency independent of context. Still, spoken word recognition under such conditions remains accurate and close in time to the uniqueness point.

All in all, the human speech perception system is amazingly well adapted to make quick decisions based on an optimal combination of all available information. This system is merely idling when listening to clearly-pronounced speech under reasonable acoustic conditions -- conditions in which computer speech recognition systems still make more mistakes than we would like. When the speech is slurred and erratic, when there is background noise or music or other people speaking at the same time, the system really shows its power. Under these conditions, where human listeners may still make out most of what is said without too much trouble, the performance of the best current computer systems degrades very rapidly. This remains an area of active research, where we know a lot about how well human perception works, but not very much about how it happens.

Mind and Brain

What we've just discussed is a taste of the vast amount we've learned over the past century or so about the mental processes of producing, perceiving and learning language. This knowledge is detailed and extensive, but in most cases, we do not know how the processes are actually implemented in the brain. Over the same period, we've learned a great deal about the localization of different linguistic abilities in different regions of the brain, and also about how neural computation works in general. However, our understanding of how the brain creates and understands language remains relatively crude. One of today's great scientific challenges is to integrate the results of these two different kinds of investigation -- of the mind and of the brain -- with the goal of bringing both to a deeper level of understanding.

As a concrete example of this mind/brain dichotomy, consider the following. From literally thousands of studies, we know that word frequency has a large effect on mental processing of both speech and text: in all sorts of tasks commoner words are processed more quickly than rarer ones, other things being equal, as in the word recognition tests involving sharp and shark discussed above. However, we don't know for sure how this is implemented in the brain. Is "neural knowledge" of more common words stored in larger or more widespread chunks of brain tissue? Are the neural representations of common words more widely or strongly connected? Are the resting activation levels of their neural representations simply higher? Are they less efficiently inhibited? Surprisingly enough, there is no clear evidence about the relative contributions of these four different kinds of brain mechanisms to the phenomenon of word frequency effects.

Similarly, psychological research tells us that there is a strong recency effect: in all sorts of tasks, words that we've heard or seen recently are processed more quickly. Again, we don't know how the recency effect arises in the brain, nor do we know whether the brain mechanisms underlying the frequency and recency effects are partly or entirely the same. There is no lack of speculation on these questions, but we honestly just don't know at this point.

This simple example is typical. Very little of what we know about mental processing of speech and language can be translated with confidence into talk about physical structures and processes in the brain. At the same time, very little of what we know about the neurology of language can now be expressed coherently in terms of what we know about mental processing of language. For example, one of the most striking facts about the neurology of speech and language is lateralization: the fact that one of the two cerebral hemispheres, usually the left one, plays a dominant role in many aspects of language-related brain function. However, we learn about this only by probing brain function directly -- looking at the symptoms of stroke or head trauma, injecting an anesthetic into the right or left internal carotid artery, imaging cerebral blood flow during the performance of certain language-related tasks, etc. There is nothing obvious in the behavioral or cognitive exploration of linguistic activity that connects to its cerebral lateralization.

The relation between mind and brain in general is an active "frontier" area of science, in which the potential for progress is very great. The neural correlates of linguistic activity, and the linguistic meaning of neural activity, are especially interesting topics. Reports of current research in this area are often presented at Penn, for example in the meetings of the IRCS/CCN Brain and Language group.

Functional localization of speech and language

Over the past couple of hundred years, most of what we know about how language is processed in the brain has come from studies of the functional consequences of localized brain injury, due to stroke, head trauma or localized degenerative disease. More recently, tools for "functional imaging" of the brain, such as fMRI, PET, MEG and ERP, provide a new sort of evidence about the localization of mental processing in undamaged brains. All of these techniques have their limitations, and so far they have mainly confirmed and refined earlier conceptions rather than revolutionizing them. However, over the next few decades these techniques promise enormous strides in understanding how the brain works in general, and in particular how it creates and understands language.

An excellent and detailed survey for a lay audience of what sorts of processing go on where in the brain, with some speculation about how and why, can be found in William H. Calvin and George A. Ojemann's CONVERSATIONS WITH NEIL'S BRAIN. If you are curious (and most people find the topic fascinating), you should spend some time reading either the on-line version or the published version of this book.

The taxonomy of language-related neurological problems, or aphasia, has been elaborated over the past decades. There are many named aphasic syndromes with clear instructions for differential diagnosis, and a plausible story about how these syndromes are linked to localization of language functions in the brain, and to injuries to various brain tissues. We'll return shortly to a more elaborated table of aphasic syndromes, with connections to diagnostic patterns and likely areas of brain damage, after looking in more detail at the two basic categories of aphasia that were identified by two 19th-century researchers, Paul Broca and Carl Wernicke.

Broca's Aphasia and Wernicke's Aphasia

As a National Institutes of Health information page says:

Broca's aphasia results from damage to the front portion of the language dominant side of the brain. Wernicke's aphasia results from damage to the back portion of the language dominant side of the brain.

Aphasia means "partial or total loss of the ability to articulate ideas... due to brain damage."

A note of caution: functional localization (the place in the brain where language functions are located) varies, sometimes considerably, across individuals. Brain injury (most commonly caused by stroke) is usually widespread enough to affect several different functional areas. Thus each patient is individual both in terms of symptoms and in terms of the correlation of symptoms to area of damage. Nevertheless, there are broad syndromes of deficit-associated-with-local-damage, as described succinctly in the NIH passage above, that are characterized as Broca's and Wernicke's aphasia.

Here is a somewhat more precise picture of the typical placement of Broca's area and Wernicke's area relative to various landmarks of cortical anatomy and physiology:

Broca's aphasia is sometimes called disfluent aphasia or agrammatic aphasia. It is named after Pierre-Paul Broca (1824-1880), a French surgeon and anthropologist who first described the syndrome and its association with injuries to a specific region of the brain.

Agrammatism typically involves laboured speech, and a lack of use of syntax in speech production and comprehension (although patients with agrammatic language production may not necessarily have agrammatic language comprehension).

An example of agrammatic speech:

Ah ... Monday ... ah, Dad and Paul Haney [the speaker] and Dad ... hospital. Two ... ah, doctors ... and ah ... thirty minutes ... and yes ... ah ... hospital. And, er, Wednesday ... nine o'clock. And er Thursday, ten o'clock ... doctors. Two doctors ... and ah ... teeth. Yeah, ... fine.

Another example:

M.E.: Cinderella...poor...um 'dopted her...scrubbed floor, um, tidy...poor, um...'dopted...Si-sisters and mother...ball. Ball, prince um, shoe...

Examiner: Keep going.

M.E.: Scrubbed and uh washed and un...tidy, uh, sisters and mother, prince, no, prince, yes. Cinderella hooked prince. (Laughs.) Um, um, shoes, um, twelve o'clock ball, finished.

Examiner: So what happened in the end?

M.E.: Married.

Examiner: How does he find her?

M.E.: Um, Prince, um, happen to, um...Prince, and Cinderella meet, um met um met.

Examiner: What happened at the ball? They didn't get married at the ball.

M.E.: No, um, no...I don't know. Shoe, um found shoe...

Here is a more detailed picture of the motor strip, showing what is sometimes called the motor homunculus, which is a depiction of how motor functions are localized along the motor strip. The portion adjacent to Broca's area controls the face and mouth.

In between the motor strip and Broca's area are the areas known as the supplementary motor area (SMA) and the premotor cortex, which are said to be involved in the generation of action sequences from memory that fit into a precise timing plan. All in all, it seems likely that Broca's area is connected to serialization of coordinated action of the speech organs.

Why do certain syntactic abilities also seem to be localized there? Perhaps a neural architecture evolved for creating and storing complex motor plans has been pressed into service to create and store symbolic rather than purely motoric structures. As Deacon (1991) writes:

Human language has effectively colonized an alien brain in the course of the last two million years. Evolution makes do with what it has at hand. The structures which language recruited to its new tasks came to serve under protest, so to speak. They were previously adapted for neural calculations in different realms and just happened to exhibit enough overlap with the demands of language processing so as to make "retraining" and "reorganization" minimally costly in terms of some as yet unknown evolutionary accounting. Many of the structural peculiarities of language, its quasi-universals, and the way that it is organized within the brain no doubt reflect this preexisting scaffolding.

The second classical aphasic syndrome is named after the German neurologist Carl Wernicke (1848-1905).

Wernicke's aphasia is sometimes called sensory aphasia or fluent aphasia. The speech of a Wernicke's patient is often a normally-intoned stream of grammatical markers, pronouns, prepositions, articles and auxiliaries, except that the speaker has difficulty in recalling correct content words, especially nouns (anomia). The empty slots where the nouns should go are often filled with meaningless neologisms (paraphasia).

The patient in the passage below is trying to describe a picture of a child taking a cookie.

C.B.: Uh, well this is the ... the /dodu/ of this. This and this and this and this. These things going in there like that. This is /sen/ things here. This one here, these two things here. And the other one here, back in this one, this one /gesh/ look at this one.

Examiner: Yeah, what's happening there?

C.B.: I can't tell you what that is, but I know what it is, but I don't know where it is. But I don't know what's under. I know it's you couldn't say it's ... I couldn't say what it is. I couldn't say what that is. This shu-- that should be right in here. That's very bad in there. Anyway, this one here, and that, and that's it. This is the getting in here and that's the getting around here, and that, and that's it. This is getting in here and that's the getting around here, this one and one with this one. And this one, and that's it, isn't it? I don't know what else you'd want.

Wernicke's patients seem to suffer from much greater disorders of thought than Broca's patients, who often seem able to reason much as before their stroke, but are simply unable to express themselves fluently. However, their non-fluency causes them much frustration, and they are said to be unhappier than Wernicke's patients, who are often blissfully unaware that nothing they say makes any sense at all, and whose higher-level thinking processes are often as haphazard as their language is.

Wernicke's area is at the boundary of the temporal and parietal lobes, near the parietal lobe association cortex, where cross-modality integration (the integration of information coming from different senses, e.g. identifying something based on the combination of its visual appearance and its smell) is said to take place, and is adjacent to the auditory association cortex in the temporal lobe. Thus Wernicke's aphasia is sometimes called a "receptive" aphasia, by distinction with the "production" aphasia of the motor-system-related Broca's syndrome. However, as the above examples indicate, Wernicke's patients show plenty of problems in producing coherent discourse.

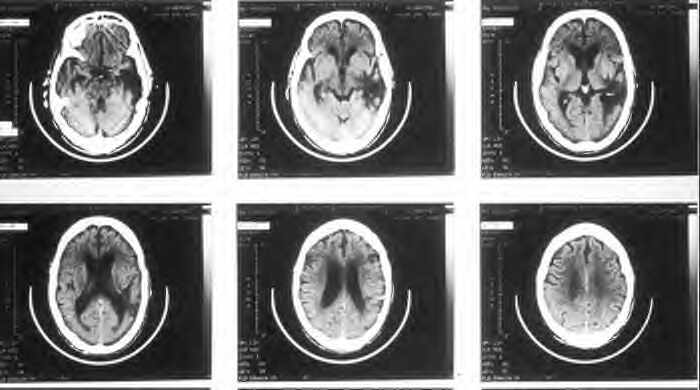

To give you some sense of what the injuries involved in these aphasic syndromes are like, here is a photo of the excised brain of a Wernicke's patient:

Here is a set of tomographic pictures of a different Wernicke's syndrome brain, showing a series of horizontal slices. The front of the head is towards the top, and the dominant (left) side is on the right, so it is as if we are looking at the brain from the bottom:

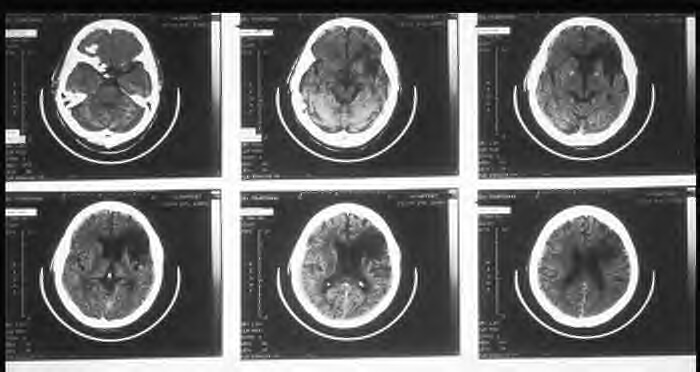

Here is a similar set of tomographic pictures of the brain of a Broca's patient:

The main point of these pictures: in typical cases of aphasia, the area of damage is rather large, and a wide variety of functions may be affected to one degree or another.

A more elaborated taxonomy

The table below shows the relationship of 8 named aphasic syndromes to six general types of symptoms:

| Syndrome | Fluent | Repetition | Comprehension | Naming | Right-side hemiplegia | Sensory deficits |
|---|---|---|---|---|---|---|
| Broca | no | poor | good | poor | yes | few |
| Wernicke | yes | poor | poor | poor | no | some |
| Conduction | yes | poor | good | poor | no | some |
| Global | no | poor | poor | poor | yes | yes |
| Transcortical motor | no | good | good | poor | some | no |
| Transcortical sensory | yes | good | poor | poor | some | yes |
| Transcortical mixed | no | good | poor | poor | some | yes |
| Anomia | yes | good | good | poor | no | no |
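The table can be read as a small differential-diagnosis key. The sketch below is a toy lookup, not a clinical tool: it assumes only the yes/no and good/poor values in the first four symptom columns (the hemiplegia and sensory columns are left out), and the names `SYNDROMES` and `classify` are invented for illustration.

```python
# The aphasia table, encoded as (fluent, repetition, comprehension, naming).
SYNDROMES = {
    "Broca":                 ("no",  "poor", "good", "poor"),
    "Wernicke":              ("yes", "poor", "poor", "poor"),
    "Conduction":            ("yes", "poor", "good", "poor"),
    "Global":                ("no",  "poor", "poor", "poor"),
    "Transcortical motor":   ("no",  "good", "good", "poor"),
    "Transcortical sensory": ("yes", "good", "poor", "poor"),
    "Transcortical mixed":   ("no",  "good", "poor", "poor"),
    "Anomia":                ("yes", "good", "good", "poor"),
}


def classify(fluent: str, repetition: str, comprehension: str) -> list[str]:
    """Return the syndromes in the table consistent with three bedside findings."""
    return [name for name, (f, r, c, _naming) in SYNDROMES.items()
            if (f, r, c) == (fluent, repetition, comprehension)]


# Distinct (fluent, repetition, comprehension) profiles across the rows.
profiles = {(f, r, c) for f, r, c, _ in SYNDROMES.values()}
print(len(profiles))                    # 8
print(classify("yes", "poor", "good"))  # ['Conduction']
```

Naming is "poor" in every row, so in this table it cannot separate the syndromes; the three remaining binary features, by contrast, give each of the eight syndromes its own profile, which is why fluency, repetition and comprehension make a natural first pass.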

Conduction aphasia generally results from lesions of the white-matter pathways that connect Wernicke's and Broca's areas, especially the arcuate fasciculus.

Global aphasia results from lesions to both Wernicke's and Broca's areas at once.

The motor and sensory variants of transcortical aphasia are produced by lesions in areas around Broca's and Wernicke's areas, respectively.
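These lesion-to-syndrome links follow the logic of the classical "connectionist" diagrams associated with Wernicke and Lichtheim, in which language areas are nodes and fiber tracts are links. Here is a deliberately crude sketch, assuming just two areas, a "concepts" node and three connections (all labels are hypothetical): comprehension needs Wernicke's area plus its link to concepts; repetition needs the Wernicke's-to-arcuate-to-Broca's route; fluent spontaneous speech needs Broca's area plus its input from concepts.

```python
# Hypothetical, highly simplified connection model (labels are illustrative):
#   W: Wernicke's area       B: Broca's area      AF: arcuate fasciculus (W to B)
#   W->C: Wernicke's area to concepts      C->B: concepts to Broca's area
COMPONENTS = {"W", "B", "AF", "W->C", "C->B"}


def predict(lesions: set[str]) -> tuple[str, str, str]:
    """Predict (fluent, repetition, comprehension) from a set of damaged components."""
    intact = COMPONENTS - lesions
    fluent = {"B", "C->B"} <= intact          # concepts can drive speech output
    repetition = {"W", "AF", "B"} <= intact   # heard words relayed to output
    comprehension = {"W", "W->C"} <= intact   # heard words reach concepts
    return ("yes" if fluent else "no",
            "good" if repetition else "poor",
            "good" if comprehension else "poor")


# Assumed lesion sites for each syndrome in this toy model.
LESIONS = {
    "Broca": {"B"},
    "Wernicke": {"W"},
    "Conduction": {"AF"},
    "Global": {"W", "B"},
    "Transcortical motor": {"C->B"},
    "Transcortical sensory": {"W->C"},
    "Transcortical mixed": {"C->B", "W->C"},
}

for name, lesion in LESIONS.items():
    print(f"{name:22} {predict(lesion)}")
```

Under these assumptions, the predicted fluency, repetition and comprehension values agree with the corresponding columns of the aphasia table for the seven syndromes modeled. Anomia and the naming column fall outside the model, and real lesions are large and vary from person to person, so this is a teaching device rather than a clinical predictor.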

There are other syndromes as well, such as "pure word deafness", in which the patient can speak and write more or less normally, but is not able to perceive speech, even though other auditory perception is intact.

In actual clinical diagnosis, more elaborate batteries of tests are commonly given in order to assess language function in more detail, and the detailed locations of lesions can be found by MRI.

Connecting mind and brain? The declarative/procedural model

Above we stressed the apparent dissociation between the phenomena of "language in the mind" and the phenomena of "language in the brain." We'll end this lecture with a brief presentation of an idea that ties the observations about brain localization of language to many other aspects of brain function, and at the same time makes contact with some of the most basic distinctions in the cognitive architecture of language. This idea has been proposed by Michael Ullman and his collaborators, under the name of the "declarative/procedural model."

Others have proposed a distinction between declarative memories and procedural memories. Declarative memory is memory for facts, like the color of a peach; procedural memory is memory for skills, like riding a bicycle. The declarative memory system is specialized for learning and processing arbitrarily-related information, and is based in temporal (and temporal/parietal) lobe structures. The procedural memory system is specialized for non-conscious learning and control of motor and cognitive skills, which involve chaining of events in time sequence, and is based in frontal/basal-ganglia circuits. Building on this earlier distinction, Ullman proposes that what we think of as lexical knowledge (the association of meaning and sound for morphemes, irregular wordforms and fixed or idiomatic phrases) is crucially linked with the declarative, temporal-lobe system, while what we think of as grammatical knowledge (productive methods for real-time sequencing of lexical elements) is crucially linked to the procedural, frontal/basal-ganglia system.

Ullman argues that declarative and lexical memory both involve learning arbitrary conceptual/semantic relations; that the knowledge involved is explicit, i.e. relatively accessible to consciousness; and that they involve lateral/inferior temporal-lobe structures for already-consolidated knowledge, and medial temporal-lobe structures for new knowledge. By contrast, procedural and grammatical memory both involve coordination of procedures in real time and computation of sequential structures; the knowledge involved in both tends to be implicit and encapsulated, so that it is relatively inaccessible to conscious examination and control; and both involve frontal and basal ganglia structures in the dominant hemisphere.
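The English past tense offers a convenient illustration of this division, since irregular wordforms count as lexical (memorized) knowledge while the regular suffix is a productive pattern. The sketch below is hypothetical and is not Ullman's implementation: the verb list, the function names and the `declarative_ok` / `procedural_ok` switches are invented, and spelling rules are simplified (no consonant doubling).

```python
from typing import Optional

# "Declarative" store: arbitrary, memorized sound-meaning pairings (sample only).
IRREGULAR_PAST = {"go": "went", "sing": "sang", "bring": "brought", "hold": "held"}


def regular_past(verb: str) -> str:
    """'Procedural' rule: productive suffixation (spelling simplified)."""
    return verb + "d" if verb.endswith("e") else verb + "ed"


def past_tense(verb: str, declarative_ok: bool = True,
               procedural_ok: bool = True) -> Optional[str]:
    # Try memory lookup first; a stored irregular form blocks the rule.
    if declarative_ok and verb in IRREGULAR_PAST:
        return IRREGULAR_PAST[verb]
    # Otherwise compute the form by rule, if the rule system is available.
    if procedural_ok:
        return regular_past(verb)
    return None  # neither route produced a form


for verb in ("walk", "blick", "go"):
    print(verb,
          past_tense(verb),
          past_tense(verb, declarative_ok=False),
          past_tense(verb, procedural_ok=False))
```

In this sketch, switching off the lookup turns "go" into the over-regularized "goed", while switching off the rule leaves irregulars intact but gives regular and novel verbs (like the made-up "blick") no form at all. These are consequences of the toy design, offered only to show how the two systems could dissociate.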

We can see this as a detailed elaboration of the old observation that Wernicke's area is adjacent to primary auditory cortex, in the direction of visual cortex and cross-modal association areas, while Broca's area is adjacent to the portion of the motor strip that controls the vocal organs. The declarative/procedural model is supported by a wide variety of interesting, specific and sometimes unexpected evidence, coming from psycholinguistic studies, developmental studies, neurological cases, functional imaging studies and neurophysiological observations.

homework